Environment & Agent

The 3D environment used for most experiments is Habitat, wrapped into a class called EnvironmentInterface. This class returns observations for given actions.

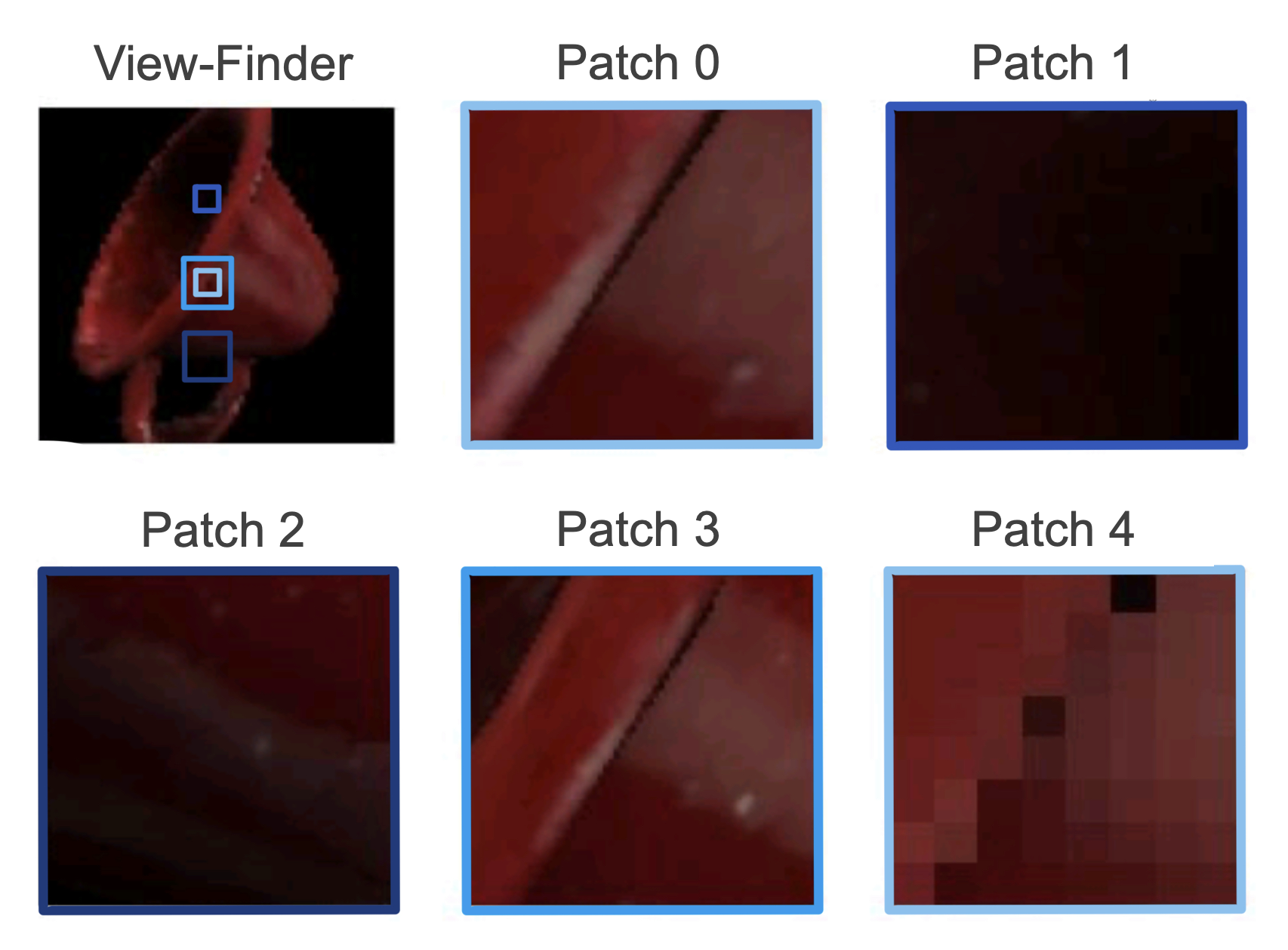

The environment is currently initialized with one agent that has N sensors attached to it, using the src/tbp/monty/conf/experiment/config/environment/init_args/agents/patch_and_view_finder.yaml configuration. By default, this config has two sensors. The first is the sensor patch, which is used for learning. It is a camera zoomed in 10x so that it perceives only a small patch of the environment. The second is the view-finder, which sits at the same location as the patch and moves together with it, but its camera is not zoomed in. The view-finder is used only at the beginning of an episode to get a good view of the object (more details in the policy section) and for visualization, not for learning or inference. The agent setup can also be customized to use more than one sensor patch (such as in src/tbp/monty/conf/experiment/config/monty/two_lm.yaml or src/tbp/monty/conf/experiment/config/monty/five_lm.yaml, see figure below). The configs also specify the type of sensor used, the features that are extracted, and the motor policy used by the agent.
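To get a feel for how small the sensor patch is compared to the view-finder, the zoom factor can be translated into an effective field of view. The sketch below assumes an ideal pinhole camera in which zooming by a factor z scales the focal length by z. The 90 degree base field of view is an illustrative assumption rather than a value taken from the config, and `effective_fov_degrees` is a hypothetical helper, not part of Monty or Habitat.

```python
import math


def effective_fov_degrees(base_fov_degrees: float, zoom: float) -> float:
    """Field of view of an ideal pinhole camera after zooming.

    Assumption: zooming by `zoom` multiplies the focal length by `zoom`,
    so the tangent of the half-angle shrinks by the same factor.
    """
    if zoom <= 0:
        raise ValueError("zoom must be positive")
    half_angle = math.radians(base_fov_degrees) / 2.0
    return math.degrees(2.0 * math.atan(math.tan(half_angle) / zoom))


# Illustrative values only: a 90 degree base field of view is assumed here.
view_finder_fov = effective_fov_degrees(90.0, zoom=1.0)  # not zoomed in
patch_fov = effective_fov_degrees(90.0, zoom=10.0)       # zoomed in 10x

print(f"view-finder: {view_finder_fov:.2f} deg, patch: {patch_fov:.2f} deg")
```

Under these assumptions the patch covers roughly 11 degrees, compared to 90 degrees for the view-finder, which is why the view-finder is the better choice for locating the object at the start of an episode.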

In principle, one could initialize multiple agents and connect them to the same Monty model but there is currently no policy to coordinate them. The difference between adding more agents vs. adding more sensors to the same agent is that all sensors connected to one agent have to move together (like neighboring patches on the retina) while separate agents can move independently like fingers on a hand (see figure below).
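The distinction between sensors and agents can be made concrete with a small sketch. The `Sensor` and `Agent` classes below are hypothetical stand-ins written for this illustration and are not Monty's actual classes. They only show that sensors attached to one agent share its motion, while separate agents move independently.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Tuple

Vec3 = Tuple[float, float, float]


def add(a: Vec3, b: Vec3) -> Vec3:
    """Element-wise sum of two 3D vectors."""
    return (a[0] + b[0], a[1] + b[1], a[2] + b[2])


@dataclass
class Sensor:
    """Hypothetical sensor with a fixed offset relative to its agent."""
    sensor_id: str
    offset: Vec3 = (0.0, 0.0, 0.0)


@dataclass
class Agent:
    """Hypothetical agent; all attached sensors move rigidly with it."""
    agent_id: str
    position: Vec3 = (0.0, 0.0, 0.0)
    sensors: List[Sensor] = field(default_factory=list)

    def move(self, delta: Vec3) -> None:
        self.position = add(self.position, delta)

    def sensor_positions(self) -> Dict[str, Vec3]:
        return {s.sensor_id: add(self.position, s.offset) for s in self.sensors}


# One agent with two sensor patches (like neighboring patches on the retina).
eye = Agent("eye", sensors=[Sensor("patch_0"), Sensor("patch_1", (0.25, 0.0, 0.0))])
# A second, separate agent (like a finger) with its own sensor.
finger = Agent("finger", sensors=[Sensor("touch_0")])

eye.move((0.5, 0.0, 0.0))  # both patches shift together, the finger stays put
print(eye.sensor_positions())
print(finger.sensor_positions())
```

Moving `eye` shifts both of its patches by the same amount and leaves `finger` untouched. Coordinating several such agents would require a policy that, as noted above, does not exist yet.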

The most commonly used environment is an empty space with a floor and one object (although we have started experimenting with multiple objects). The object can be initialized in different rotations, positions, and scales. For objects, one can either use the default Habitat objects (cube, sphere, capsule, ...) or the YCB object dataset (Calli et al., 2015), containing 77 more complex objects such as a cup, bowl, chain, or hammer, as shown in the figure below. Currently there is no physics simulation, so objects are not affected by gravity or touch and do not move during an episode.
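As a rough illustration of what "different rotations, positions, and scales" can mean in practice, the sketch below enumerates one set of initialization parameters per combination. `ObjectInitParams` and `enumerate_object_params` are hypothetical names introduced for this example. The concrete values, and the choice of Euler angles in degrees, are assumptions and do not reflect Monty's actual config format.

```python
from dataclasses import dataclass
from itertools import product
from typing import List, Sequence, Tuple

Vec3 = Tuple[float, float, float]


@dataclass(frozen=True)
class ObjectInitParams:
    """Hypothetical container for how one object is placed in the scene."""
    name: str
    position: Vec3
    rotation_euler_deg: Vec3  # assumed convention for this sketch
    scale: Vec3


def enumerate_object_params(
    names: Sequence[str],
    positions: Sequence[Vec3],
    rotations: Sequence[Vec3],
    scales: Sequence[Vec3],
) -> List[ObjectInitParams]:
    """Return one parameter set per (name, position, rotation, scale) combination."""
    return [
        ObjectInitParams(n, p, r, s)
        for n, p, r, s in product(names, positions, rotations, scales)
    ]


params = enumerate_object_params(
    names=["cup", "bowl"],
    positions=[(0.0, 1.5, 0.0)],
    rotations=[(0.0, 0.0, 0.0), (0.0, 90.0, 0.0), (0.0, 180.0, 0.0)],
    scales=[(1.0, 1.0, 1.0)],
)

for p in params:
    print(p.name, p.rotation_euler_deg)
```

Because the object does not move during an episode, each such parameter set stays fixed for the whole episode in which it is used.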

Of course, the EnvironmentInterface classes can also be customized to use different environments and setups as shown in the table below. We are not even limited to 3D environments and can for example let an agent move in 2D over an image such as when testing on the Omniglot dataset. The only crucial requirement is that we can use an action to retrieve a new, action-dependent, observation from which we can extract a pose.
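The requirement above (an action goes in, an action-dependent observation with a recoverable pose comes out) can be sketched with a toy 2D example. `ToyImageEnvironment` and `ToyEnvironmentInterface` are hypothetical classes written for this illustration and do not reproduce Monty's actual EnvironmentInterface API. They only mirror the idea of an environment wrapped in an object that supports iter and next.

```python
from typing import Callable, Dict, List, Tuple

Action = Tuple[int, int]  # (row delta, column delta), an assumed action format


class ToyImageEnvironment:
    """Hypothetical 2D environment: a small patch moves over an image."""

    def __init__(self, image: List[List[int]], patch_size: int = 2) -> None:
        self.image = image
        self.patch_size = patch_size
        self.row = 0
        self.col = 0

    def reset(self) -> Dict:
        self.row, self.col = 0, 0
        return self._observe()

    def step(self, action: Action) -> Dict:
        # Clamp the patch so that it always stays inside the image.
        max_row = len(self.image) - self.patch_size
        max_col = len(self.image[0]) - self.patch_size
        self.row = min(max(self.row + action[0], 0), max_row)
        self.col = min(max(self.col + action[1], 0), max_col)
        return self._observe()

    def _observe(self) -> Dict:
        size = self.patch_size
        patch = [r[self.col:self.col + size] for r in self.image[self.row:self.row + size]]
        # The location plays the role of the pose that accompanies each observation.
        return {"patch": patch, "location": (self.row, self.col)}


class ToyEnvironmentInterface:
    """Hypothetical wrapper that yields one observation per step."""

    def __init__(self, env: ToyImageEnvironment,
                 policy: Callable[[Dict], Action], max_steps: int) -> None:
        self.env = env
        self.policy = policy
        self.max_steps = max_steps

    def __iter__(self) -> "ToyEnvironmentInterface":
        self._steps = 0
        self._observation = self.env.reset()
        return self

    def __next__(self) -> Dict:
        if self._steps >= self.max_steps:
            raise StopIteration
        if self._steps > 0:
            # Model-free policy: the previous observation decides the next action.
            self._observation = self.env.step(self.policy(self._observation))
        self._steps += 1
        return self._observation


image = [[4 * r + c for c in range(4)] for r in range(4)]
interface = ToyEnvironmentInterface(
    ToyImageEnvironment(image), policy=lambda obs: (0, 1), max_steps=3
)
observations = list(interface)
print([obs["location"] for obs in observations])
```

Swapping in a different environment only requires that `reset` and `step` keep returning an observation together with the location it was sensed at.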

For some examples of how to use Monty with environments other than Habitat (or even in the real world), see this tutorial.

| List of all environment interface classes | Description |
|---|---|
| EnvironmentInterface | Base environment interface class that implements basic iter and next functionalities and episode and epoch checkpoint calls for Monty to interact with an environment. |
| EnvironmentInterfacePerObject | Environment interface for testing on the environment with one object. After each epoch, it removes the previous object from the environment and loads the next object in the pose provided in the parameters. |
| InformedEnvironmentInterface | Environment interface that allows for input-driven, model-free policies (using the previous observation to decide the next action). It implements the find_good_view function and other helpers for the agent to stay on the object surface. It also supports jumping to a target state when driven by model-based policies, although this is a TODO to refactor "jumps" into motor_policies.py. |
| OmniglotEnvironmentInterface | Environment interface that wraps around the Omniglot dataset and allows movement along the stroke of the different characters. Has similar cycle_object structure as EnvironmentInterfacePerObject. |

| List of all environments | Description |
|---|---|
| HabitatEnvironment | Environment that initializes a 3D Habitat scene and allows interaction with it. |
| OmniglotEnvironment | Environment for the Omniglot dataset. This is originally a 2D image dataset but reformulated here as stroke movements in 2D space. |

We also have a prototype ObjectBehaviorEnvironment class in the monty_lab repository (object_behaviors/environment.py) for testing moving objects.
